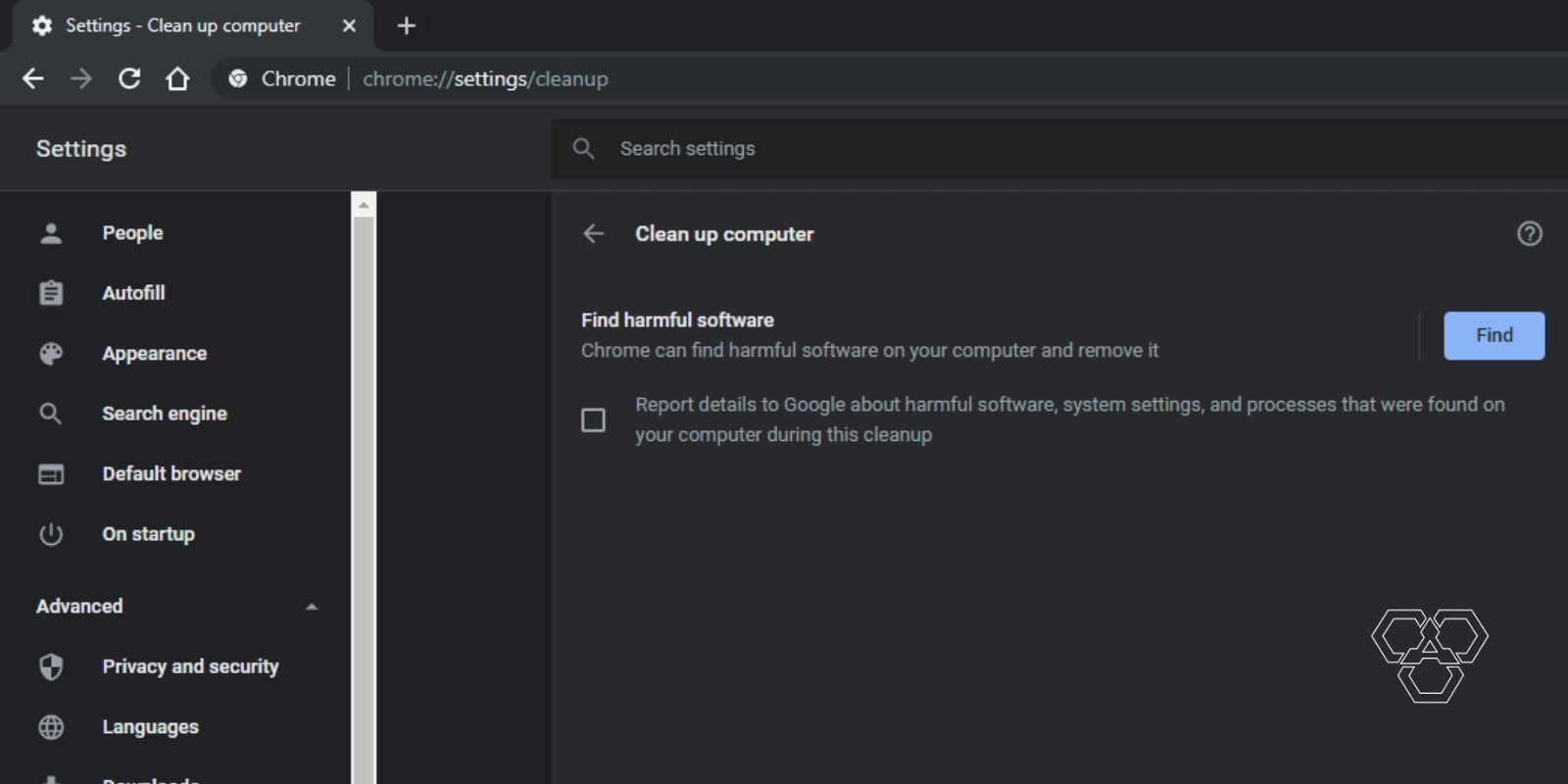

The second task starting in line 20 is created by the ActionFunc() function, a tool to structure new customized tasks in chromedp. The Navigate task starting on line 18 directs the browser to the Linux Magazine website. The Tasks structure starting on line 17 defines a set of actions that you want the connected Chrome browser to perform, using the DevTools protocol. This function can be called by the main program later to signal to another (maybe deeply) nested part of the program that it is time to clean up, because doors are being closed. A context constructor in Go returns a cancel() function. Listing 1 creates a new chromedp context in line 13 and gives the constructor a standard Go background context, which is an auxiliary construct for controlling Go routines and subroutines. The whole thing runs at the command line if you typeĠ5 emu "/chromedp/cdproto/emulation"ĥ3 err = ioutil.WriteFile("screenshot.png",įigure 1: A screenshot of the Linux Magazine cover page in a remote-controlled Chrome instance. The Go program in Listing 1 launches the Chrome browser, points it at the Linux Magazine web page, and then takes a screenshot of the retrieved content. See the site's permission page and consult the applicable laws for your jurisdiction. I'll take a look at some screen-scraping techniques in this article, but keep in mind that many websites have licenses that prohibit screen scraping. Go enthusiasts can now write their unit tests and scraper programs natively in their favorite language. Google's Chrome browser additionally implements the DevTools protocol, which does similar things, and the chromedp project on GitHub defines a Go library based on it.

The tool speaks the Selenium protocol, which is supported by all standard browsers, to get things moving. One elegant workaround is for the scraper program to navigate a real browser to the desired web page and to inquire later about the content currently displayed.įor years, developers have been using the Java Selenium suite for fully automated unit tests for Web user interfaces (UIs). In fact, even a scraping framework like Colly would fail here, because it does not support JavaScript and does not know the browser's DOM (Document Object Model), upon which the web flow relies. The problem is that state-of-the-art websites are teeming with reactive design and dynamic content that only appears when a bona fide, JavaScript-enabled web browser points to it.įor example, if you wanted to write a screen scraper for Gmail, you wouldn't even get through the login process with your script. Gone are the days when hobbyists could simply download websites quickly with a curl command in order to machine-process their content.
